I’ve been using Midjourney daily since the open beta first came out.

What does that mean? Well, almost 10,000 images, countless hours of fiddling, playing, and working, and of course, a crapload of encounters with Midjourney’s almost-ban hammer.

In case you don’t know, Midjourney is AI art-generation software whose main interface is on Discord, where you can simply type “/imagine” and the world becomes your artistic oyster.

Now, with great opportunity for trolling comes a great number of trolls, and Midjourney was no exception. Up until May 2023, Midjourney had two major trolling problems.

Midjourney’s Abuse Problem

First, they had a free version of their software, where you could create something like 25 images with each new account, the caveat being you couldn’t use the images commercially (although using any of their images commercially is currently a topic going through the courts).

The downside of having a free version, of course, is the vast potential for abuse it opens up.

Apparently, someone recorded the handful of steps needed to open the various accounts behind a “new” Midjourney user, automated the process, and helped spread it. Eventually the company got so sick of people abusing their system that they removed the free trial entirely. Because at the end of the day, all that computing power costs money.

But what were they using it for?

Who knows, but I would guess troll farms were among them. After all, did you see the fake Trump arrest images? Millions of people did, and most of them probably believed them at first.

Midjourney’s answer was to ban more and more words. However, such heavy-handed moderation curtails creative liberty. Take the word “arrest”, which was barred after the Trump images went viral, even though it has countless legitimate uses.

There are at least 20 movies with the word “arrest” in their titles. There are probably more TV shows, let alone episodes. What about quotes? What about describing news events? How about all of history?

Clearly, this ever-expanding list of banned words in Midjourney was becoming more of an issue for its millions of users, myself included.

I write a lot of satirical and humorous articles for various projects I’m involved in (and for the enjoyment of life), and believe me, the list of words you couldn’t use kept getting longer and longer.

Sure, you could find a way around the words most of the time. But one of my favorite subjects, a historical event where someone was injured and possibly (gasp!) bleeding, was a constant battle to find ways around the offending word. Which, of course, isn’t offensive to 99% of the world, especially when you’re just trying to make silly little cartoons to share with your friends and the two people who read my blog.

But according to their T&Cs, that could get you banned. So, user beware. Of course, you wouldn’t dare put “melting red jelly” or “bubbled dark red liquid” in its place, even if that would get around the censors, right?

And whenever a popular platform starts limiting what people can do, lists start popping up all over the internet to keep track of the banned words. The problem is, no one could truly verify them (they’d get banned from Midjourney trying), so a lot of the compiled lists are essentially full of assumptions, or are outright fake, GPT-generated lists.

They might be completely right, they might be completely fake, but who’s going to test them? No one could, except the employees at Midjourney, who likely used those lists to expand their own banned words list in a never-ending, banned-words version of Inception.

You can see the many problems this archaic, bias-prone system posed.

How Does Midjourney’s Banned Words System Work?

- Write a banned word in your prompt, get a warning.

- Within a certain time frame, keep getting warned for repeated use of any banned words.

- After a certain number of warnings within a certain time frame, get an account suspension (I believe it was about 5 banned words in perhaps an hour, and it led to a 3-hour suspension, if my memory serves me correctly).

This still applies to any Midjourney version before the latest one, MJ v5, but I assume it will be phased out at some point. The method couldn’t understand words in context, which made it a blunt and inefficient approach to content moderation.
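To make the old behavior concrete, here is a minimal Python sketch of a keyword filter with a sliding-window warning counter. It is not Midjourney’s code: the banned words are placeholders, the thresholds are my recollection from above (about 5 warnings in an hour, then a 3-hour suspension), and matching on exact whole words is an assumption. Notice that it warns on “cardiac arrest” just as readily as on anything genuinely offensive, because it has no sense of context.

```python
import re
from collections import deque

# Hypothetical thresholds based on the author's recollection, not official numbers.
WARNINGS_BEFORE_SUSPENSION = 5
WINDOW_SECONDS = 60 * 60          # roughly one hour
SUSPENSION_SECONDS = 3 * 60 * 60  # roughly three hours

# Placeholder list; Midjourney's real list is not public.
BANNED_WORDS = {"arrest", "blood"}


class KeywordModerator:
    """Context-blind keyword filter with per-user warnings and suspensions."""

    def __init__(self):
        self.warnings = {}         # user -> deque of warning timestamps (seconds)
        self.suspended_until = {}  # user -> timestamp when the suspension ends

    def check(self, user, prompt, now):
        """Return 'suspended', 'warned', or 'ok' for a prompt sent at time `now`."""
        if self.suspended_until.get(user, 0) > now:
            return "suspended"

        # Exact whole-word matching (an assumption): "arrested" does not match "arrest".
        words = set(re.findall(r"[a-z]+", prompt.lower()))
        if not words & BANNED_WORDS:
            return "ok"

        history = self.warnings.setdefault(user, deque())
        history.append(now)
        # Drop warnings that have aged out of the sliding window.
        while history and now - history[0] > WINDOW_SECONDS:
            history.popleft()

        if len(history) >= WARNINGS_BEFORE_SUSPENSION:
            self.suspended_until[user] = now + SUSPENSION_SECONDS
            history.clear()
            return "suspended"
        return "warned"
```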

So what did Midjourney do to fix it?

How Does Midjourney Ban Words Now?

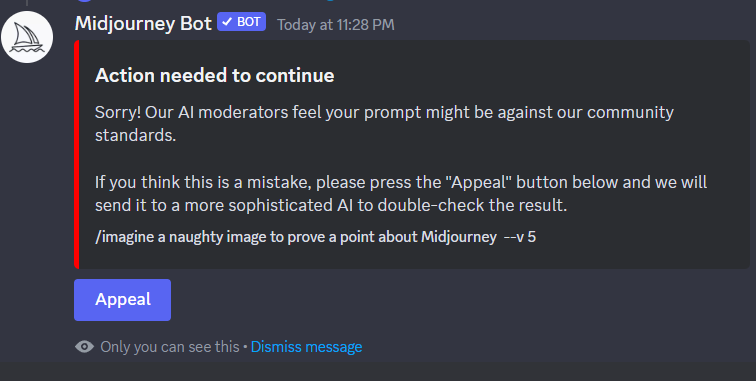

The new AI moderator system boasts a smarter and more nuanced approach, employing a two-layered process to scan and evaluate queries.

Initially, a fast AI scans all queries; if it deems a prompt unsuitable, users can click an “Appeal” button to escalate the query to a larger, more powerful AI for further evaluation.

The full statement is:

“Action needed to continue. Sorry! Our AI moderators feel your prompt might be against our community standards. If you think this is a mistake, please press the ‘Appeal’ button below and we will send it to a more sophisticated AI to double-check the result.”

Now two things can happen.

#1. If the appeal is successful, which it has been for most of my flagged prompts, the bot decides the prompt is fine and starts generating images from it.

#2. If the appeal is unsuccessful, meaning the second, “more advanced” robot also decides the image you tried to make is offensive or not PG-13, you can try appealing to the human moderation staff at Midjourney. I’ve only seen this triggered twice now, and neither time did I have an interest in poking the bear, so to speak.

According to Midjourney, with this two-layer system, the developers aim to provide an almost 100% chance of allowing innocent prompts to pass through, greatly enhancing the user experience. However, due to the cost of the larger AI, the number of appeals per user is currently limited to five per 24 hours, with adjustments based on traffic volume.

So, essentially, the first robot will screen as many prompts as you like, but if you want to appeal to the second robot, which uses the “smarter” AI, you’re limited to 5 tries in 24 hours. Presumably, that also means you can only send 5 appeals to actual, real, live human beings at Midjourney.
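Here is a rough Python sketch of the flow described above: a cheap first pass on every prompt, plus a per-user cap of 5 appeals per rolling 24 hours before the expensive check runs. The two classifier functions are hypothetical stand-ins for whatever models Midjourney actually uses, and counting rejected appeals against the cap is my assumption.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict

APPEALS_PER_WINDOW = 5                 # per Midjourney's stated limit
APPEAL_WINDOW_SECONDS = 24 * 60 * 60   # rolling 24 hours

# A classifier returns True if the prompt is allowed.
Classifier = Callable[[str], bool]


@dataclass
class TwoTierModerator:
    fast_check: Classifier     # hypothetical cheap first-pass model
    careful_check: Classifier  # hypothetical expensive appeal model
    appeals: Dict[str, Deque[float]] = field(default_factory=dict)

    def submit(self, prompt: str) -> str:
        """Every prompt goes through the cheap model; there is no limit here."""
        return "generate" if self.fast_check(prompt) else "flagged"

    def appeal(self, user: str, prompt: str, now: float) -> str:
        """Send a flagged prompt to the expensive model, if the user has appeals left."""
        history = self.appeals.setdefault(user, deque())
        # Forget appeals older than the rolling window.
        while history and now - history[0] >= APPEAL_WINDOW_SECONDS:
            history.popleft()
        if len(history) >= APPEALS_PER_WINDOW:
            return "appeal limit reached"
        history.append(now)  # assumption: rejected appeals also count
        if self.careful_check(prompt):
            return "generate"
        return "escalate to human moderators"
```

The design point is that the expensive model is only ever reached through `appeal`, so the cap bounds how often it runs per user.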

What I Think Midjourney is Doing With Their Auto-Moderation

Here’s what I think Midjourney is doing with the new system, but I have no specific proof.

- I think they are using OpenAI’s GPT-4 API.

- They likely have a long saved prompt detailing the kinds of images they don’t want the platform to create. It probably incorporates their giant list of banned words, as well.

- The first layer, which is described as sort of a dumb AI, is likely using a simplified prompt and GPT-3 or GPT-3.5, where the cost per query is vastly reduced. They could even be using the $20-a-month chatbot version, although that isn’t designed for automated, high-volume use. (I’d assume Midjourney is getting millions of queries a day, a good percentage of which are flagged.)

- The second layer, the smarter AI that handles appeals, is likely the GPT-4 API, where the cost is higher, which would explain why Midjourney has talked about limiting how often it can be used.
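If my guess is right, the economics explain the design. Here is a back-of-the-envelope Python model comparing a cheap screener plus capped appeals against running the expensive model on every prompt. Every input is a made-up placeholder, not a real Midjourney or OpenAI figure.

```python
from typing import Tuple


def moderation_cost(prompts_per_day: int, flag_rate: float, appeal_rate: float,
                    active_users: int, appeal_cap: int,
                    cheap_cost: float, expensive_cost: float) -> Tuple[float, float]:
    """Return (two_layer_cost, expensive_only_cost) per day.

    All parameters are hypothetical placeholders:
    - flag_rate: share of prompts the cheap model flags
    - appeal_rate: share of flagged prompts that users appeal
    - appeal_cap: appeals allowed per user per day
    - cheap_cost / expensive_cost: cost per query for each model
    """
    cheap_total = prompts_per_day * cheap_cost
    wanted_appeals = prompts_per_day * flag_rate * appeal_rate
    # The per-user cap puts a ceiling on how many expensive calls can happen.
    appeals = min(wanted_appeals, active_users * appeal_cap)
    two_layer = cheap_total + appeals * expensive_cost
    expensive_only = prompts_per_day * expensive_cost
    return two_layer, expensive_only
```

With purely illustrative inputs (1,000,000 prompts a day, 5% flagged, half of those appealed, 200,000 users, a cap of 5, $0.0002 per cheap query and $0.01 per expensive one), the two-layer setup comes to roughly $450 a day versus $10,000 for running the expensive model on everything.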

My point? They officially outline what isn’t allowed, and all images should generally fall under the PG-13 label, plus whatever they think China’s president would count as offensive. And all of this is enforced by AI bots, which are themselves full of biases based on how they were created and refined over time.

Kind of a scary prospect straight out of 1984, but then again, maybe it’s time to update that book for 2024, anyway?

The Problems With Midjourney Using AI for its Moderation

- False positives: The AI might mistakenly block innocent prompts due to a lack of understanding of context or cultural nuances.

- False negatives: Harmful or inappropriate content might slip through the system, exposing users to unwanted material.

- Limited appeals: The cap on appeals per user could keep legitimate prompts from getting through once a user runs out of appeals.

- Unintended bias: The AI system might inadvertently develop biases based on the data it has been trained on, leading to unfair moderation.

- User frustration: Frequent false positives or negatives could lead to dissatisfaction among users, potentially causing a decline in user engagement or platform abandonment.

- Exclusion of certain perspectives: If the AI system displays unintended bias, it may result in the exclusion of specific viewpoints, harming the diversity of content and discussions on the platform.

- Overreliance on AI: As the new moderation system gains popularity, there might be a tendency to rely too heavily on the AI, resulting in less human oversight and intervention.
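False positives and false negatives are easy to measure if you have a hand-labeled sample of prompts. A minimal Python sketch, assuming each sample records whether the AI flagged the prompt and whether a human judged it actually violating (the function name and data shape are hypothetical):

```python
from typing import Iterable, Tuple


def moderation_error_rates(samples: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Each sample is (flagged_by_ai, actually_violating).

    Returns (false_positive_rate, false_negative_rate):
    - false positive rate: share of innocent prompts the AI wrongly blocked
    - false negative rate: share of violating prompts the AI let through
    """
    false_pos = false_neg = innocent = violating = 0
    for flagged, is_violating in samples:
        if is_violating:
            violating += 1
            if not flagged:
                false_neg += 1
        else:
            innocent += 1
            if flagged:
                false_pos += 1
    fpr = false_pos / innocent if innocent else 0.0
    fnr = false_neg / violating if violating else 0.0
    return fpr, fnr
```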

I’m not exactly sure how I feel about this new implementation, or whether it’s better or worse than the previous system. Either way, I think it’s a mistake to restrict every image based on what an AI, fed subjective inputs from Midjourney, feels doesn’t conform to PG-13 standards.

That being said, I don’t know a better solution other than relaxing the constraints Midjourney feeds the AI in the first place. Life, history, and humor don’t abide by PG-13; I’m not sure why our AI-imagined world has to, either.

In case you want to know more about what isn’t acceptable, here is a reworked version of Midjourney’s official stance on content moderation.

What Isn’t Allowed on Midjourney?

Here’s a shortened list of what Midjourney feels isn’t appropriate on their platform, AKA their community guidelines:

- Keep content PG-13 for an inclusive community

- Be respectful; no aggressive or abusive content

- No adult content, gore, or visually shocking material

- Ask permission before reposting others’ creations

- Share responsibly outside the platform

- Violations may result in bans

- Gore: detached body parts, cannibalism, blood, violence, deformities, etc.

- NSFW/Adult content: nudity, sexual organs, explicit imagery, fetishes, etc.

- Offensive content: racist, homophobic, derogatory, or disturbing material

And here’s a reworked list of why they feel that way:

- Balancing creative expression with community safety

- Guidelines aim for inclusivity, respect, and positive user experience

- Encourage responsible use of new, participatory technology

- Prevent harmful impact on public perception of the platform

- Banned keywords: minimize offensive or harmful content

- Banned words may seem harmless but can produce violating content

- AI limitations: doesn’t understand context like humans do

- Example: peach emoji can be innocent or NSFW

- Repeated violations may lead to warnings or bans

- Goal: foster a welcoming, supportive community for all

J.J. Pryor
